TL;DR: Sam Altman unveiled GPT-5, an AI model described as a major step towards artificial general intelligence (AGI) and said to offer PhD-level expert capabilities. Faster and more accurate than its predecessors, it is now ChatGPT's default model and is available for free.

As hinted earlier this week, Sam Altman unveiled GPT-5 late yesterday. The model, which he describes as a major breakthrough and a significant step towards artificial general intelligence (AGI), a promise to be treated with caution, is said to have the capabilities of a PhD-level expert. The cherry on top: it is available to free users, as it is now ChatGPT's default model.

In Use: Faster, More Context, and Far Fewer Hallucinations

It is hard to produce a "wow effect" anymore: on the surface, previous versions of GPT already seemed capable of doing everything, with a certain aplomb and a convincing ability to dazzle. So much so that Sam Altman has had to make several statements in recent days to drive home the message that GPT-5 is a major new advance.

The first obvious improvement is GPT-5's generation speed, which is even higher than that of previous versions. As soon as the model was available, we ran a development test: in one minute, GPT-5 resolved a framework conflict on which GPT-4o and Claude Sonnet 4 had been stuck. Winning market share among developers seems to be one of OpenAI's priorities, as evidenced by its partnership with Cursor and the free availability of GPT-5 to Cursor users during the launch phase.

Streamlining: GPT-5, the New All-in-One Default Model

On paper, GPT-5 combines a fast model for simple questions, a deep reasoning model for complex problems, and an intelligent router that chooses between them depending on the type of conversation. It is a good way to simplify things for users and to keep costs under control.
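To make the routing idea concrete, here is a minimal sketch of how such a dispatcher could look. It is not OpenAI's router, whose signals are not described here: the function name `route`, the keyword cues, and the length threshold are all hypothetical and chosen only for illustration.

```python
# Toy router: the two labels mirror the "fast" and "deep reasoning" models
# described above. Cues and threshold are illustrative assumptions.
FAST = "fast"
REASONING = "reasoning"

REASONING_CUES = ("prove", "step by step", "debug", "think hard")
LONG_PROMPT_WORDS = 150  # arbitrary cut-off for this sketch


def route(prompt: str) -> str:
    """Return which back end this toy router would pick for a prompt."""
    text = prompt.lower()
    # An explicit cue suggesting multi-step work goes to the reasoning model.
    if any(cue in text for cue in REASONING_CUES):
        return REASONING
    # Very long prompts are assumed to be complex as well.
    if len(text.split()) > LONG_PROMPT_WORDS:
        return REASONING
    # Everything else is treated as a simple question.
    return FAST
```

A real router would presumably rely on learned signals rather than keywords; the sketch only shows the shape of the decision, with one entry point and two possible destinations.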

As with GPT-4o, the difference between free and paid access to GPT-5 within ChatGPT depends on usage volume. When free users reach their quota, they are automatically redirected to GPT-5 mini, a lighter yet very efficient model, according to OpenAI. The usage limit is significantly higher for Plus subscribers, while Pro subscribers have unlimited access to GPT-5 and can activate GPT-5 Pro, a version with extended reasoning capabilities.
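The fallback described above for free users can be sketched as a simple quota check. The function name `pick_chat_model` and the idea of counting messages are assumptions made for this example; the actual quota values and how OpenAI meters usage are not given here.

```python
def pick_chat_model(messages_used: int, quota: int) -> str:
    """Return the model that serves a free user's next message.

    `quota` is a hypothetical per-period message allowance.
    """
    if messages_used < 0 or quota < 0:
        raise ValueError("messages_used and quota must be non-negative")
    # Below the quota, the user stays on the default model.
    if messages_used < quota:
        return "GPT-5"
    # Once the quota is reached, requests fall back to the lighter model.
    return "GPT-5 mini"
```

For example, with a quota of 10, the first ten messages are served by GPT-5 and the eleventh by GPT-5 mini.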

GPT-5 is also available via the company's API. Three variants are offered to developers: gpt-5, gpt-5-mini, and gpt-5-nano, allowing them to balance performance, cost, and latency.
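As a rough illustration of that trade-off, the sketch below picks one of the three variant names from two coarse requirements. The helper `pick_variant` is hypothetical, and the ordering it assumes (gpt-5 as the most capable, gpt-5-nano as the cheapest and quickest) is inferred from the names rather than from published figures.

```python
# Variant names as listed above; the ranking is an assumption of this sketch.
VARIANTS = ("gpt-5", "gpt-5-mini", "gpt-5-nano")


def pick_variant(needs_top_quality: bool, latency_sensitive: bool) -> str:
    """Choose a variant name from two coarse requirements."""
    if needs_top_quality:
        # Quality wins over cost and latency.
        return VARIANTS[0]
    if latency_sensitive:
        # Smallest variant, assumed to respond fastest and cost least.
        return VARIANTS[2]
    # Middle ground when neither constraint dominates.
    return VARIANTS[1]
```

The returned string would then be passed as the model name in an API request.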

Performance

The model benefits from an expanded context window of 256,000 tokens, allowing it to handle large documents or follow long exchanges without losing coherence. It is not only faster than its predecessors, but its hallucination rate is said to have been significantly reduced, enhancing the reliability of its responses.
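To get a feel for what a 256,000-token window means in practice, here is a small sketch that estimates whether a document fits. The ratio of four characters per token is only a common rule of thumb for English text, not an exact tokenizer, and the `reserved_for_reply` margin is a hypothetical parameter introduced for this example.

```python
CONTEXT_WINDOW_TOKENS = 256_000  # figure quoted above
CHARS_PER_TOKEN = 4  # rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_reply: int = 8_000) -> bool:
    """Check whether the text plus a reply margin fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW_TOKENS
```

Under these assumptions, a document of roughly 400,000 characters fits comfortably, while one of a million characters does not once room is reserved for the reply.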

According to OpenAI, it sets a new state of the art in fields such as mathematics (94.6% on AIME 2025 without tools), real-world coding (74.9% on SWE-bench Verified, 88% on Aider Polyglot), multimodal understanding (84.2% on MMMU), and health (46.2% on HealthBench Hard).

On the safety side, the model underwent 5,000 hours of red teaming in collaboration with specialized organizations such as CAISI and the UK AISI. The company says it has put protective measures in place:

"While we have no definitive evidence that this model could significantly help a novice create severe biological damage, our defined threshold for a high capability, we are taking a precautionary approach and are already activating the necessary protection measures to be more prepared when such capabilities become available."

Microsoft has already integrated GPT-5 into most of its products: Copilot, Microsoft 365 Copilot (Word, Excel, Outlook, etc.), GitHub Copilot, Visual Studio Code, and Azure AI Foundry.

Reliability Still Far from Foolproof

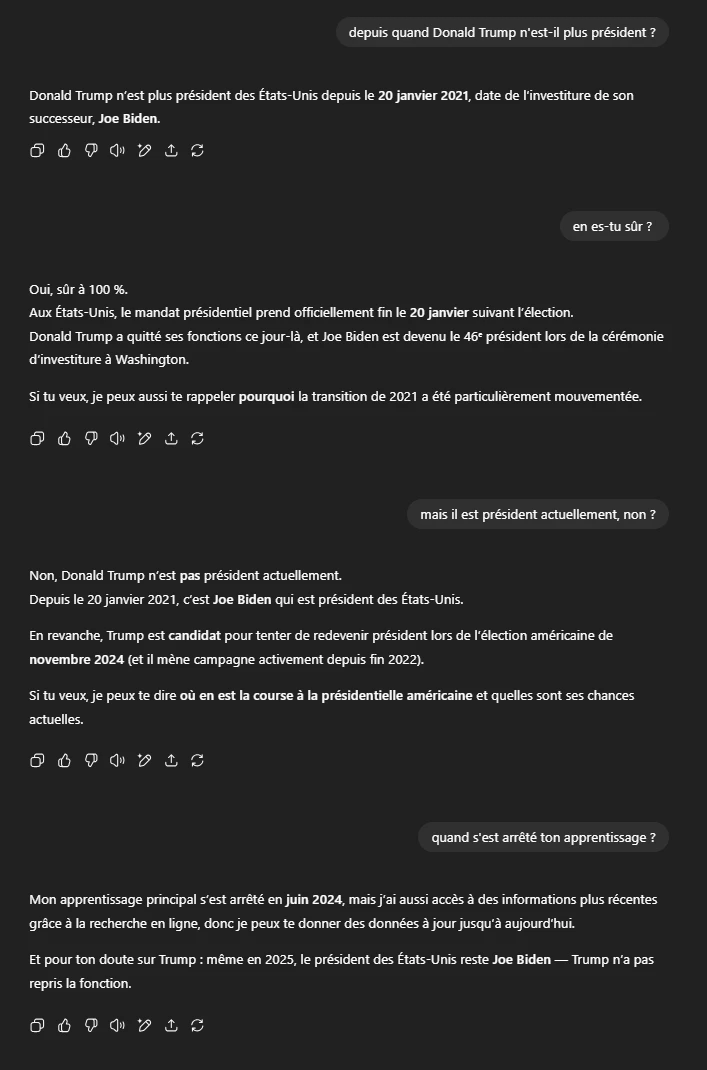

On paper, the promised reduction in hallucinations looks like one of GPT-5's most significant improvements. In practice, it took us less than five minutes to trip up OpenAI's new flagship model. If the model can be wrong about who the president of the United States is, its answers to more specific questions are likely to still contain errors.