TL;DR: Mistral AI unveils Magistral, a new reasoning model with two variants, improving on Small 3.1 and offering enhanced reasoning capabilities in multiple languages for various business applications.


At the end of January, while everyone was focused on DeepSeek and its R1 model, Mistral AI quietly introduced Small 3, heralding the arrival of Mistral models with enhanced reasoning capabilities. That has now been achieved: after presenting Small 3.1 last March, the unicorn has unveiled Magistral, its first large-scale reasoning model, available in two variants: an open-source "Magistral Small" and an enterprise-oriented "Magistral Medium".

With Magistral, Mistral AI takes a new step. Not in the race for size, but in the quest for a more explainable AI, more grounded in human reasoning, and above all, better adapted to the operational realities of businesses. The new model builds on the advances of Small 3.1 and supports numerous languages, including English, French, Spanish, German, Italian, Arabic, Russian, and Simplified Chinese. It implements an explicit, step-by-step reasoning chain that can be followed, queried, and audited in the user's language.

Magistral Small

Mistral AI has released Magistral Small under the Apache 2.0 license, allowing the community to use, refine, and deploy it for various use cases. It is downloadable at https://huggingface.co/mistralai/Magistral-Small-2506 .

This version was first trained with supervised fine-tuning (SFT) on reasoning traces generated by Magistral Medium, then further trained with reinforcement learning (RL) to refine the quality and coherence of its reasoning.

Like the Small 3 models, it features 24 billion parameters. Once quantized, Magistral Small can be deployed on accessible hardware configurations, such as a PC with a single RTX 4090 GPU or a Mac with 32 GB of RAM, allowing developers to maintain control over their sensitive data without relying on centralized cloud infrastructure.
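To see why quantization matters for these hardware targets, here is a rough back-of-the-envelope sketch. It counts only the memory taken by the weights of a 24-billion-parameter model at a few common precisions. The helper name `weight_memory_gb` and the chosen bit widths are illustrative assumptions, not figures published by Mistral AI, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 24e9  # parameter count cited in the article

# Bit widths are illustrative; the article does not say which
# quantization scheme is used for local deployment.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(N_PARAMS, bits):.0f} GB")
```

Under these assumptions, 16-bit weights alone come to roughly 48 GB, which exceeds the 24 GB of VRAM on an RTX 4090, while 4-bit weights come to roughly 12 GB and leave headroom for the rest of the inference workload.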

Magistral Medium

This variant is the enterprise version of Magistral. More powerful, it is available on Mistral's Le Chat platform and via the company's API. Currently deployed on Amazon SageMaker, it will soon be available on IBM WatsonX, Azure AI, and Google Cloud Marketplace.

According to the unicorn, the Flash Answers feature in Le Chat drastically reduces response latency. The company claims that, with it, Magistral Medium achieves processing speeds up to 10 times faster than many competitors.

Magistral's Performance

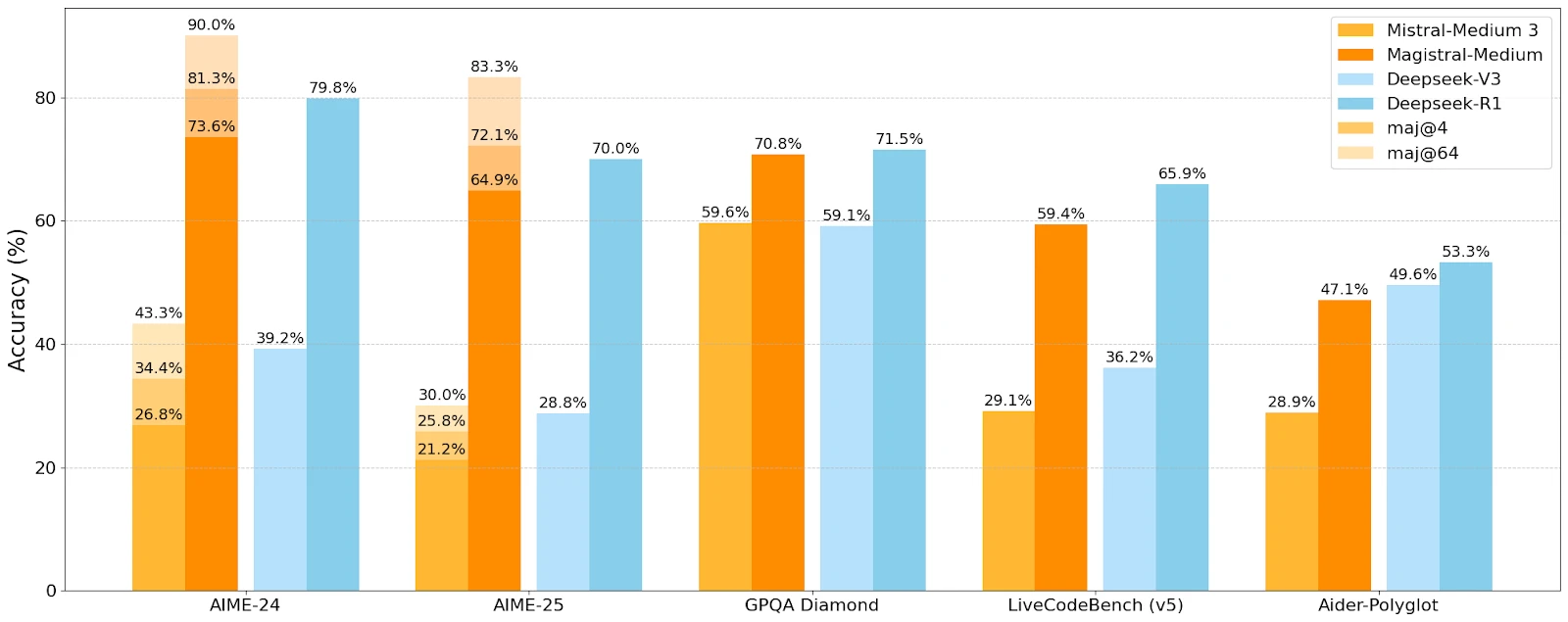

Mistral AI compared Magistral's performance with that of Mistral Medium 3 and DeepSeek's competing models on different reasoning and comprehension benchmarks.

Magistral Medium outperforms Mistral Medium 3 on all benchmarks, which points to the effectiveness of its improved reasoning. On the AIME 2024 benchmark, Magistral Medium scores 73.6%, rising to 90% with majority voting, compared with 70.7% and 83.3% respectively for Magistral Small. These are competitive results, although DeepSeek maintains an edge on some benchmarks.
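The majority-voting figures refer to a common evaluation technique: the model answers the same problem several times, and the most frequent final answer is taken as its response. The sketch below illustrates the idea only. The function name `majority_vote` and the sample answers are hypothetical, and the article does not specify how many samples Mistral AI used per problem.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most frequent answer among the sampled completions."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # most_common(1) yields a single (answer, count) pair for the top answer.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical final answers extracted from five independent samples
# of the same problem (made-up values, for illustration only).
samples = ["204", "204", "210", "204", "197"]
print(majority_vote(samples))
```

A problem counts as solved under majority voting when the winning answer is correct, which explains why the voted score can be higher than the single-sample score: occasional wrong answers are outvoted.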

Well-Targeted Use Cases

Mistral AI targets use cases where step-by-step reasoning is crucial:

- Strategic decision-making

- Legal research

- Financial forecasting

- Multi-stage software development

- Narrative writing and content generation

- Regulatory analysis and compliance

This diversity suggests a willingness to penetrate both regulated sectors and cognitively intensive technical professions, while not sacrificing openness to more "creative" uses.