TL;DR: The international "ICHIBAN" mission, led by the German Aerospace Center (DLR) and the Japan Aerospace Exploration Agency (JAXA), successfully demonstrated communication and cooperation between two astronaut assistance robots aboard the ISS. The achievement opens the way for robotic interactions in orbital environments and marks a significant step toward future lunar and Martian missions.

The German Aerospace Center (DLR) and the Japan Aerospace Exploration Agency (JAXA) announce that the international cooperation mission "ICHIBAN" has been successful. For the first time, two astronaut assistance robots from different national programs communicated and cooperated in orbit aboard the ISS.

One of the objectives of the ICHIBAN mission (the name means "first" in Japanese), which concluded on July 29th, was to test the coordination of several distinct robots operating simultaneously and in real time aboard the ISS.

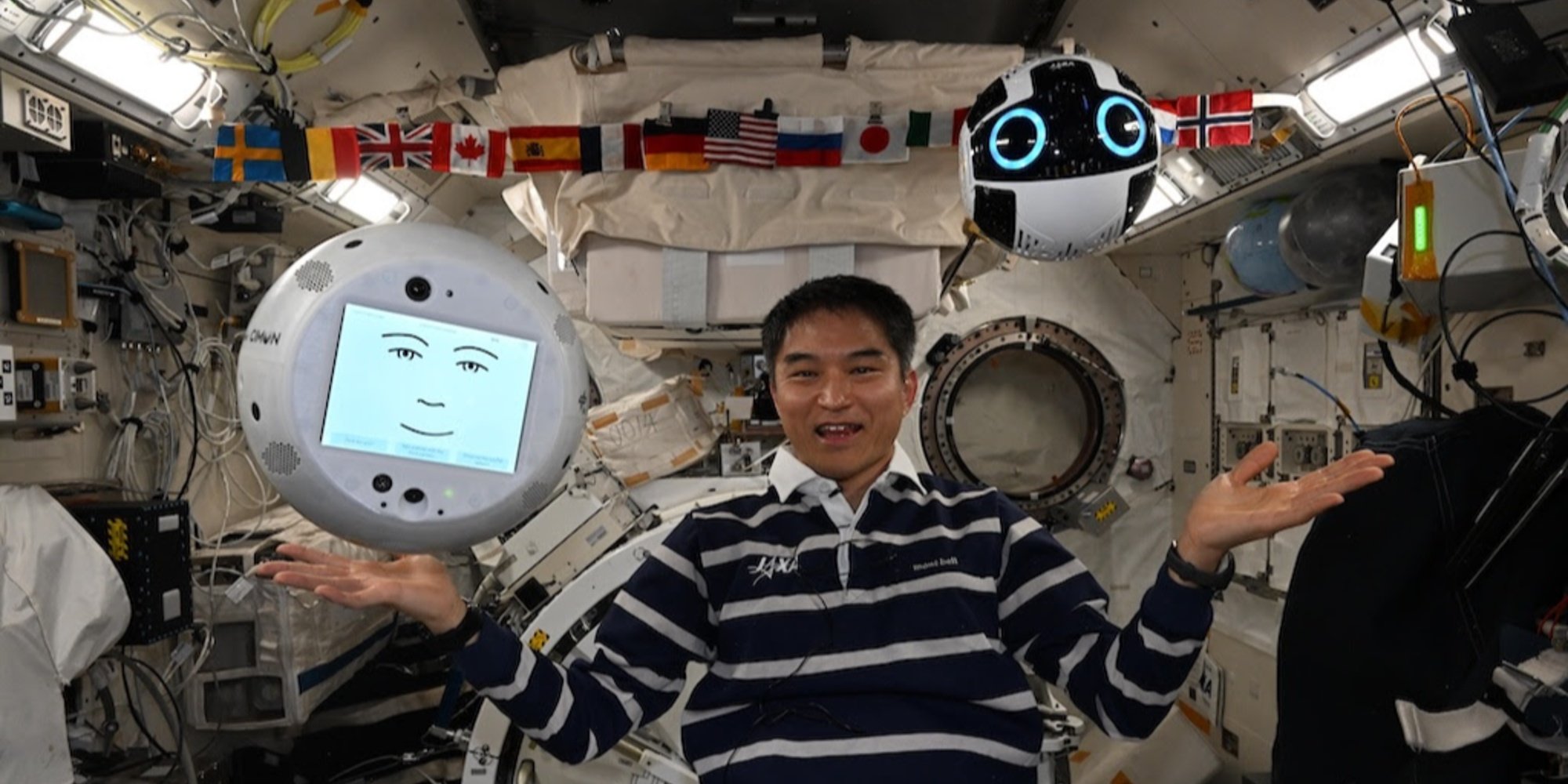

The two robots involved in the experiment are CIMON, developed by DLR, Airbus, and IBM, and Int-Ball2, designed by JAXA, installed respectively in the European Columbus and Japanese Kibo experiment modules.

First deployed in 2018, CIMON (Crew Interactive Mobile Companion) is a 3D-printed spherical robot about the size of a ball, powered by the voice and cognitive technologies of IBM's watsonx AI platform. During the ICHIBAN mission, CIMON was operated by BIOTESC, the Swiss ESA center specializing in scientific operations aboard the International Space Station.

Int-Ball2, meanwhile, is an evolution of JAXA's first camera drone. Installed in the Japanese module since 2023, it lets the ground control team in Tsukuba (Japan) film astronauts' activities remotely, improving scientific documentation without taking up the International Space Station crew's time. Until now, no capability for interacting with other robots had been planned.

The demonstration was conducted by Takuya Onishi, a JAXA astronaut aboard the ISS. From the Columbus module, he gave voice commands to CIMON, which served as an intelligent language interface. Each instruction was processed by IBM's watsonx platform and then translated into operational commands sent to Int-Ball2.

Int-Ball2 then navigated through the Kibo module to search for and locate various hidden objects: a Rubik's Cube, a hammer, several screwdrivers, and an out-of-service Int-Ball unit. It then transmitted live images to CIMON's screen, allowing Onishi to verify the objects' positions visually from the Columbus module.

Credit: JAXA/DLR

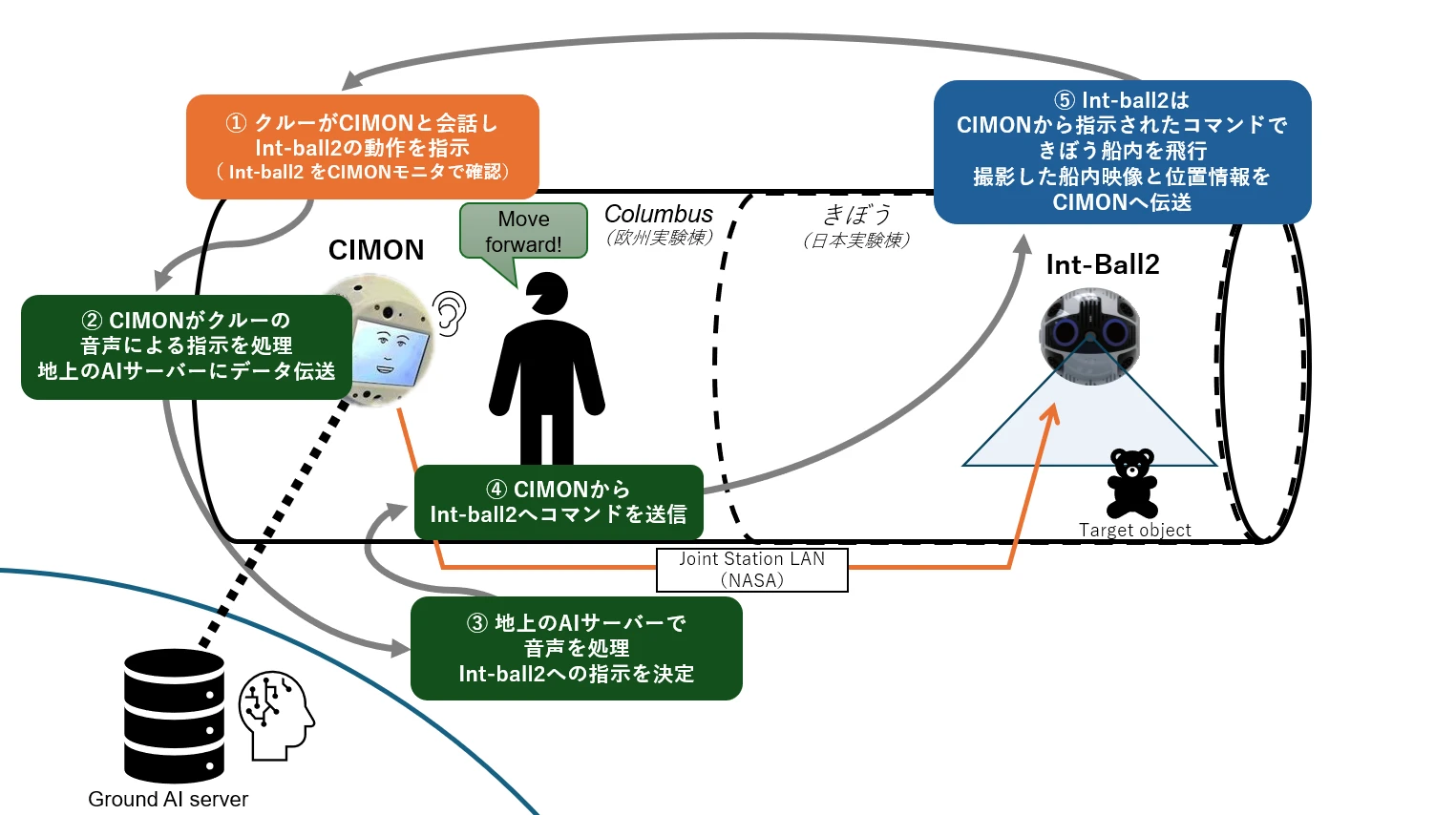

Illustration of the ICHIBAN Mission Collaboration Board

The astronaut gives CIMON a voice instruction for Int-Ball2 to perform a task (step ①). CIMON transmits this voice command to an AI server on the ground, which analyzes the command's intent (steps ② and ③). Once processed, the command is sent back from the ground to CIMON over the station's LAN (Joint Station LAN/NASA), and CIMON forwards it to Int-Ball2 (step ④). Int-Ball2 then carries out its task in the Japanese "Kibo" module (e.g., locating an object) and sends the images and position data back to CIMON.
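For readers who think in code, the relay described above can be sketched as a small simulation. This is only an illustration under stated assumptions: every class, function, and field name below is hypothetical, the keyword match stands in for the ground AI server's intent analysis, and nothing here reflects the actual CIMON, watsonx, or Int-Ball2 software or protocols.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class Command:
    """Operational command derived from a spoken instruction (hypothetical format)."""
    action: str
    target: str


@dataclass
class Report:
    """What the camera robot sends back: an image reference and a position."""
    target: str
    image: str
    position: Optional[Position]


def ground_intent_server(utterance: str) -> Command:
    """Steps 2 and 3: stand-in for the ground AI server that analyzes intent.

    A simple keyword match replaces real language understanding here.
    """
    text = utterance.lower().strip()
    if text.startswith("find "):
        return Command(action="locate", target=text[len("find "):].strip())
    raise ValueError(f"unrecognized instruction: {utterance!r}")


class CameraRobot:
    """Stand-in for Int-Ball2: looks up an object and reports back."""

    def __init__(self, known_objects: dict):
        # Assumed lookup table; a real robot would search with its camera.
        self.known_objects = known_objects

    def execute(self, command: Command) -> Report:
        if command.action != "locate":
            raise ValueError(f"unsupported action: {command.action!r}")
        position = self.known_objects.get(command.target)  # None if not found
        return Report(command.target, f"{command.target}.jpg", position)


class AssistantRobot:
    """Stand-in for CIMON: voice interface, relay, and display."""

    def __init__(self, intent_server: Callable[[str], Command], camera_robot: CameraRobot):
        self.intent_server = intent_server
        self.camera_robot = camera_robot
        self.screen = []  # reports shown to the astronaut

    def handle_voice(self, utterance: str) -> Report:
        command = self.intent_server(utterance)      # steps 1 to 3: voice to intent
        report = self.camera_robot.execute(command)  # step 4: forward the command
        self.screen.append(report)                   # images and position come back
        return report


int_ball2 = CameraRobot({"hammer": (1.0, 0.5, 2.0)})
cimon = AssistantRobot(ground_intent_server, int_ball2)
report = cimon.handle_voice("Find hammer")
print(report.target, report.position)
```

The point of the sketch is the shape of the loop: the assistant robot never interprets the instruction itself, it only relays it to the ground and forwards the result, then displays whatever the camera robot sends back.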

The innovation is that, until now, images captured by Int-Ball2 could only be transmitted to the Tsukuba control center. The ability to send them in real time to another robot on board opens a new field for distributed robotic interactions in the orbital environment.

This first step is significant in light of future lunar and Martian missions.