Ilia Sucholutsky and Matthias Schonlau, researchers at the University of Waterloo, Canada, have developed a method that makes machine learning more effective on limited datasets. Their algorithm can learn several concepts from the same image, so it can be trained with one image, or even fewer, per category. Their work is published in a paper titled “‘Less Than One’-Shot Learning: Learning N Classes From M<N Samples,” available on arXiv.

The technique, called “less-than-one-shot learning,” trains an AI model to accurately identify more classes of objects than the number of examples it was trained on. This is a major change from the costly and time-consuming process that currently requires thousands of examples of an object for accurate identification.

“More effective machine learning and deep learning models mean that AI can learn faster, is potentially smaller, and is lighter and easier to deploy,” said Ilia Sucholutsky, a PhD student at the University of Waterloo and lead author on the study introducing the method.

“These improvements will unlock the possibility of using deep learning in contexts where previously we could not because it was simply too costly or impossible to collect more data.”

“As machine learning models begin to appear on IoT devices and phones, we need to be able to train them more effectively, and less-than-one-shot learning could make this possible,” said Ilia Sucholutsky.

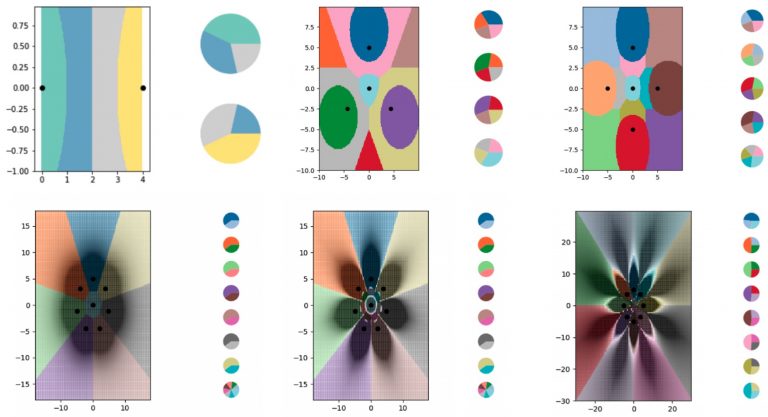

The less-than-one-shot learning technique builds on earlier work on soft labels and data distillation carried out by Sucholutsky and his supervisor, Professor Matthias Schonlau. In that work, the researchers succeeded in training a neural network to classify images of all 10 handwritten digits using only five “carefully designed soft-label examples,” which is fewer than one example per digit. Applying their technique to the MNIST dataset, they achieved 94% accuracy with only 10 images (created from the original 60,000 images).

To develop this new technique, the researchers used the k-nearest neighbours (k-NN) machine learning algorithm, which classifies each data point according to the examples it is most similar to. They chose k-NN because it makes the analysis more manageable, but less-than-one-shot learning can be used with any classification algorithm.

By using “k-nearest neighbours”, the researchers show that it is possible for machine learning models to learn to discern objects even when there is not enough data for some classes. In theory, this can work for any classification task.
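The idea of learning more classes than there are samples can be illustrated with a minimal Python sketch. It assumes a soft-label variant of k-NN in which each training point carries a probability vector over classes and each neighbour's vector is weighted by inverse distance. The function name `soft_label_knn_predict`, the two prototype positions, and the soft-label values are hypothetical choices made for illustration, not figures taken from the researchers' paper.

```python
import numpy as np

def soft_label_knn_predict(prototypes, soft_labels, queries, k=2, eps=1e-12):
    """Classify queries with distance-weighted k-NN over soft-labelled points.

    Illustrative sketch only: names and the inverse-distance weighting
    are assumptions, not the authors' reference implementation.
    """
    prototypes = np.asarray(prototypes, dtype=float)    # (n_prototypes, n_features)
    soft_labels = np.asarray(soft_labels, dtype=float)  # (n_prototypes, n_classes)
    queries = np.asarray(queries, dtype=float)          # (n_queries, n_features)

    # Euclidean distance from every query to every prototype.
    dists = np.linalg.norm(queries[:, None, :] - prototypes[None, :, :], axis=2)

    # Indices and distances of the k nearest prototypes for each query.
    nearest = np.argsort(dists, axis=1)[:, :k]
    nearest_dists = np.take_along_axis(dists, nearest, axis=1)

    # Closer prototypes contribute more; eps avoids division by zero.
    weights = 1.0 / (nearest_dists + eps)

    # Sum the weighted soft-label vectors and pick the highest-scoring class.
    scores = np.einsum("qk,qkc->qc", weights, soft_labels[nearest])
    return scores.argmax(axis=1)

# Two training points on a line, but three classes (M = 2 < N = 3).
prototypes = [[0.0], [1.0]]
soft_labels = [
    [0.6, 0.4, 0.0],  # mostly class 0, partly class 1
    [0.0, 0.4, 0.6],  # mostly class 2, partly class 1
]
queries = [[0.1], [0.5], [0.9]]

predictions = soft_label_knn_predict(prototypes, soft_labels, queries)
print(predictions)
```

In this toy setup, points near either end of the line are assigned to classes 0 and 2, while points near the middle are assigned to class 1, a class that neither training point has as its dominant label. The third class exists only because both soft labels share some probability mass on it.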

“Something that seemed absolutely impossible at first turned out to be possible,” said Matthias Schonlau of the University of Waterloo. “It’s absolutely amazing because a model can learn more classes than you have examples of, and that’s thanks to the soft label. So now that we’ve shown that this is possible, work can begin to determine all the applications.”

A paper detailing the new technique, “‘Less Than One’-Shot Learning: Learning N Classes From M<N Samples,” by Waterloo Faculty of Mathematics researchers Ilia Sucholutsky and Matthias Schonlau, is under review at one of the leading AI conferences.

Translated from Des chercheurs de l’Université de Waterloo présentent le Less Than One-Shot Learning pour créer des modèles sur des datasets limités