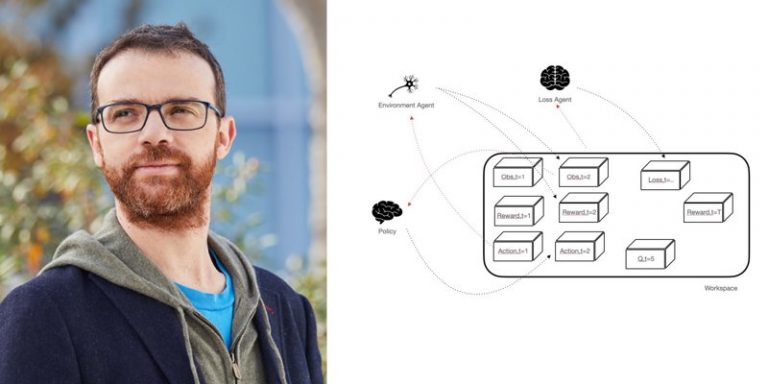

Last month, FAIR presented SaLinA, a lightweight library that uses an agent-based approach to implement sequential decision models, including (but not limited to) reinforcement learning algorithms. To find out more, we spoke to Ludovic Denoyer, research scientist at FAIR Paris and previously a professor at Sorbonne University, who authored SaLinA with Alfredo de la Fuente, Song Duong, Jean-Baptiste Gaya, Pierre-Alexandre Kamienny and Daniel H. Thompson.

ActuIA: What is the SaLinA library?

Ludovic Denoyer: SaLinA is a lightweight library that can be seen as an extension of PyTorch that brings sequentiality into information processing. It adds to PyTorch the ability to handle the temporal dimension of data and computation naturally, and it provides a framework for implementing sequential decision systems, particularly reinforcement learning algorithms.

What is the benefit of the agent-based approach for this library?

Concretely, where PyTorch lets you compose ‘modules’ to build complex neural networks, SaLinA lets you compose ‘agents’ to build more complex agents, each agent being a sequential model. Moreover, where PyTorch draws a clear line between model and data (through separate classes such as DataLoader), SaLinA unifies the two completely: data and predictions alike are generated by agents. For example, one agent loads data from a dataset into memory, and another agent models a learning environment.
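To make the idea of composing agents more tangible, here is a minimal pure-Python sketch. It is not SaLinA's actual API: `Workspace`, `Agent`, `Sequence`, `CounterEnv`, `ParityPolicy` and `rollout` are hypothetical names chosen for illustration, and in practice the agents would presumably be PyTorch modules operating on tensors. The sketch only shows the pattern described above, in which the environment and the policy are both agents that read and write time-indexed variables in a shared store.

```python
from typing import Any, Dict, List, Tuple


class Workspace:
    """Hypothetical shared store: variables indexed by (name, time step)."""

    def __init__(self) -> None:
        self._data: Dict[Tuple[str, int], Any] = {}

    def set(self, name: str, t: int, value: Any) -> None:
        self._data[(name, t)] = value

    def get(self, name: str, t: int) -> Any:
        return self._data[(name, t)]

    def series(self, name: str, n_steps: int) -> List[Any]:
        return [self._data[(name, t)] for t in range(n_steps)]


class Agent:
    """Hypothetical base class: an agent acts on the workspace at time t."""

    def __call__(self, ws: Workspace, t: int) -> None:
        raise NotImplementedError


class CounterEnv(Agent):
    """Toy environment agent: the observation is a running sum of actions."""

    def __call__(self, ws: Workspace, t: int) -> None:
        if t == 0:
            ws.set("obs", 0, 0)
        else:
            ws.set("obs", t, ws.get("obs", t - 1) + ws.get("action", t - 1))


class ParityPolicy(Agent):
    """Toy policy agent: action 1 on even observations, 2 on odd ones."""

    def __call__(self, ws: Workspace, t: int) -> None:
        ws.set("action", t, 1 if ws.get("obs", t) % 2 == 0 else 2)


class Sequence(Agent):
    """Composite agent: runs its sub-agents in order at each time step."""

    def __init__(self, *agents: Agent) -> None:
        self.agents = agents

    def __call__(self, ws: Workspace, t: int) -> None:
        for agent in self.agents:
            agent(ws, t)


def rollout(agent: Agent, n_steps: int) -> Workspace:
    """Run one agent over n_steps time steps and return the workspace."""
    ws = Workspace()
    for t in range(n_steps):
        agent(ws, t)
    return ws


ws = rollout(Sequence(CounterEnv(), ParityPolicy()), n_steps=4)
print(ws.series("obs", 4))     # [0, 1, 3, 5]
print(ws.series("action", 4))  # [1, 2, 2, 2]
```

Because `Sequence` is itself an agent, composites can be nested in the same way that PyTorch modules can contain other modules.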

This unification has two advantages. First, it makes the resulting code much easier to understand, since there is only one abstraction. Second, it makes it possible to model complex learning settings, such as model-based RL or batch RL, simply by changing the agents involved in the learning process, without changing the algorithm itself. We thus obtain a clear separation between the learning algorithm and the architecture of the models being trained (as PyTorch does for classical deep learning). This is a major characteristic of the library, whereas other reinforcement learning libraries usually offer algorithms tied to specific architectures.

Concretely, all the algorithms we provide with SaLinA work with perceptrons, recurrent neural networks and transformers, without any change to the algorithm code.
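The separation between algorithm and agents described above can be illustrated with another small sketch. Again, this is an assumption-laden toy rather than SaLinA code: a plain dictionary stands in for the shared store, `discounted_return` stands in for "the algorithm", and `live_env` and `make_replay_agent` are hypothetical agents representing an online environment and a fixed log of trajectories (the batch-RL case). The point is only that the algorithm function stays untouched when the agent is swapped.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins: a dict keyed by (name, time step) plays the role
# of the shared store, and an agent is any callable that writes into it.
Workspace = Dict[Tuple[str, int], float]
Agent = Callable[[Workspace, int], None]


def live_env(ws: Workspace, t: int) -> None:
    """Toy online environment: the reward at step t is simply t."""
    ws[("reward", t)] = float(t)


def make_replay_agent(logged_rewards: List[float]) -> Agent:
    """Toy batch-RL style agent: replays rewards from a fixed log."""

    def replay(ws: Workspace, t: int) -> None:
        ws[("reward", t)] = logged_rewards[t]

    return replay


def discounted_return(agent: Agent, n_steps: int, gamma: float = 0.5) -> float:
    """The 'algorithm': it reads only workspace variables and never needs
    to know whether the rewards came from an environment or a dataset."""
    ws: Workspace = {}
    for t in range(n_steps):
        agent(ws, t)
    return sum(gamma ** t * ws[("reward", t)] for t in range(n_steps))


# Same algorithm, two different data sources.
print(discounted_return(live_env, 3))                              # 1.0
print(discounted_return(make_replay_agent([1.0, 1.0, 1.0]), 3))    # 1.75
```

The same swap applies to model architectures: as long as an agent writes the variables the algorithm expects, the algorithm does not need to know what is inside it.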

Despite the strong influence of reinforcement learning in the design of SaLinA itself, its use is not limited to RL. What are its possible uses?

Today, two worlds coexist: the proponents of supervised learning who model their decision processes through so-called “atomic” functions learned from data sets, and the proponents of reinforcement learning who model sequential processes in interaction with the environment. SaLinA creates a bridge between the two worlds, allowing for example the development of sequential algorithms on data sets, or on mixtures of environments and data.

For supervised learning researchers, it opens up the possibility of developing more sequential algorithms (e.g. cascade models, or complex attention models). For reinforcement learning researchers, it makes it easier to use data (e.g. trajectories collected from users), and thus widens the range of what is possible. Moreover, everyone can use the same library, which simplifies the exchange of ideas between the two communities.

The SaLinA library is currently very light (300 lines of code), but the decoupling and structure it provides facilitate both experimentation and industrialization. Is this lightness at the heart of its DNA, or do you plan to gradually develop it into an increasingly feature-rich framework?

SaLinA is more an extension of PyTorch (provided with many example algorithms) than a library as such. Our goal is to keep it as simple as possible while increasing the number of algorithms built on it. Keeping it this simple makes it easy to adopt, and it also lets users rely on all the tools of the PyTorch ecosystem (e.g. PyTorch Lightning). Algorithms developed in SaLinA can therefore be put into production with the same tools as PyTorch models, which allows us to focus on developing algorithms without creating a new ecosystem that would be complicated to maintain.

There are two lines of development: on the one hand, building complex agents (e.g. optimized transformers, agents interacting with real users, etc.), and on the other hand, implementing many algorithms that reproduce the state of the art and can also serve as a basis for building new models.

Many thanks to Ludovic Denoyer and to FAIR for their answers.

More information: https://arxiv.org/pdf/2110.07910.pdf and https://github.com/facebookresearch/salina

Translated from PyTorch : La librairie SaLinA expliquée par Ludovic Denoyer (FAIR)