Officially unveiled in April 2021, the Wafer Scale Engine V2 (the chip inside the Cerebras CS-2 system) is the latest processor designed by Cerebras, an American semiconductor company. Looking to push further and network its processors together, the firm has announced that it can link up to 192 CS-2 systems. Since a single Wafer Scale Engine V2 carries 850,000 cores, such a cluster totals roughly 163 million cores.

The Wafer Scale Engine V2, an 850,000-core chip built on a 7 nm process

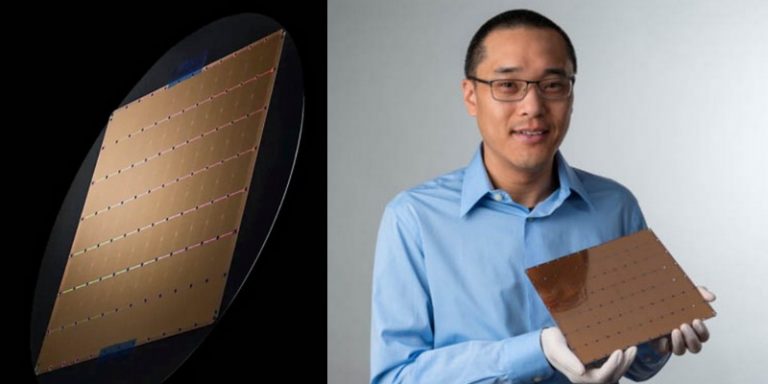

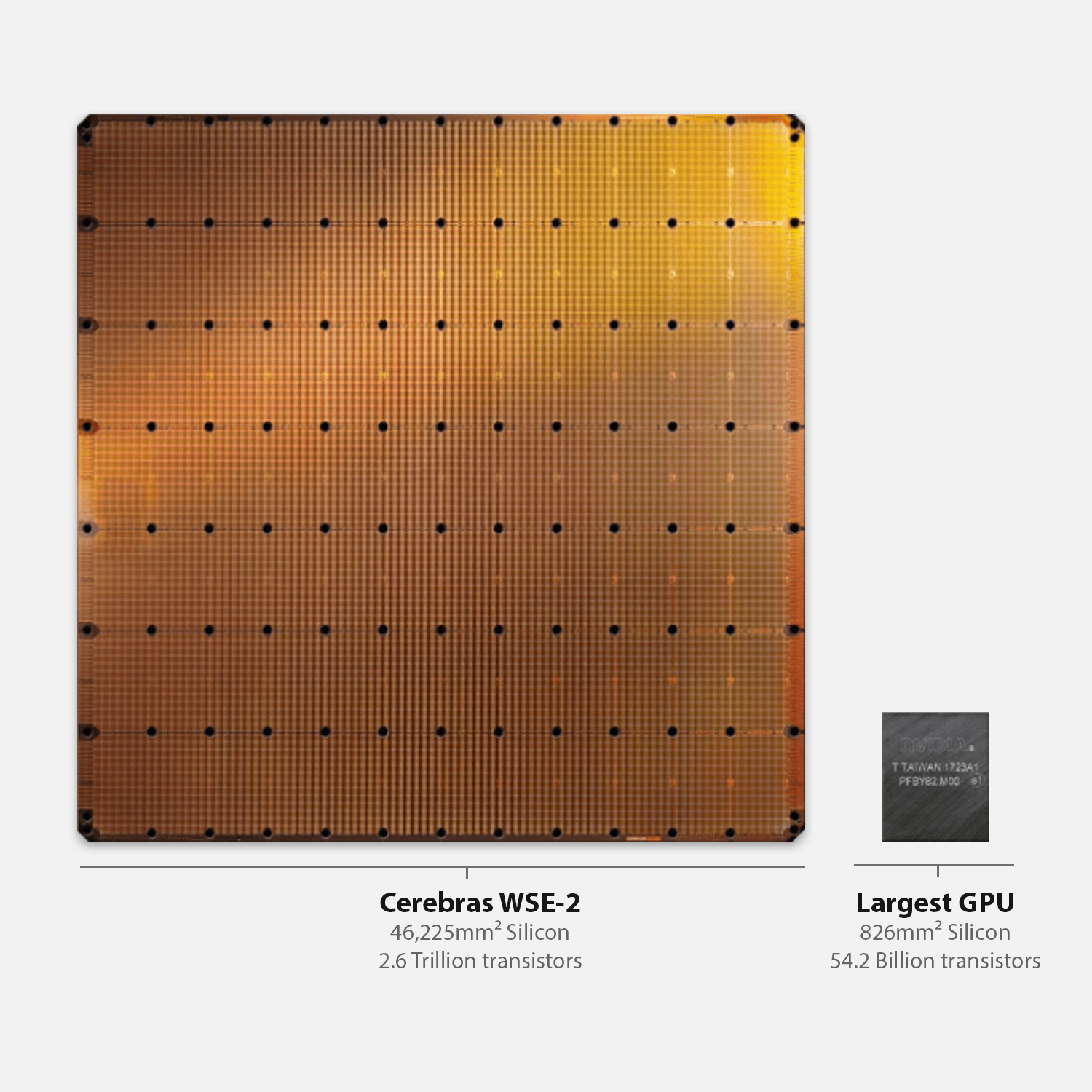

Cerebras’ Wafer Scale Engine V1 measured 46,225 mm² (a square 21.5 cm on a side) and packed 1.2 trillion transistors and 400,000 cores. In April 2021, Cerebras announced the Wafer Scale Engine V2, built on a 7 nm process, which allowed the company to fit 850,000 cores and 2.6 trillion transistors into the same die area (21.5 cm x 21.5 cm).

The chip carries 40 GB of on-board SRAM, offers a memory bandwidth of 20 PB/s, and benefits from a 220 Pb/s interconnect. I/O is handled by twelve 100 GbE ports, for an aggregate bandwidth of 1.2 Tb/s. A single system draws up to 23 kW. To operate, the processor is integrated into the Cerebras CS-2 system.

As for the architecture, the cores are laid out as tiles, which are in turn spread across a multitude of small dies on the wafer. Each tile has its own SRAM, FMAC datapath, router and tensor control unit. The cores are interconnected through a 2D mesh.
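To put the quoted figures in perspective, here is a minimal sketch that divides the chip-level numbers by the core count and pictures the 2D interconnect as a plain grid. The even split across cores, the decimal units, the four-neighbour layout without wraparound and the `mesh_neighbors` helper are illustrative assumptions, not details published by Cerebras.

```python
# Chip-level figures quoted in the article.
CORES = 850_000
SRAM_BYTES = 40e9          # 40 GB of on-chip SRAM (decimal GB assumed)
MEM_BW_BYTES_S = 20e15     # 20 PB/s memory bandwidth
IO_BITS_S = 1.2e12         # 1.2 Tb/s aggregate I/O
IO_PORTS = 12

# Assumption: resources are shared evenly between cores.
sram_per_core_kb = SRAM_BYTES / CORES / 1e3
mem_bw_per_core_gb_s = MEM_BW_BYTES_S / CORES / 1e9
io_per_port_gb_s = IO_BITS_S / IO_PORTS / 1e9


def mesh_neighbors(row, col, rows, cols):
    """Return the up/down/left/right neighbours of a tile in a 2D grid.

    Hypothetical helper: tiles on the edge simply have fewer neighbours
    (no wraparound links are assumed).
    """
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    return [(r, c) for r, c in candidates if 0 <= r < rows and 0 <= c < cols]


print(f"SRAM per core:        {sram_per_core_kb:.1f} KB")
print(f"Memory bandwidth/core: {mem_bw_per_core_gb_s:.1f} GB/s")
print(f"I/O per port:          {io_per_port_gb_s:.0f} Gb/s")
print("Neighbours of corner tile (0, 0) in a 3x3 grid:", mesh_neighbors(0, 0, 3, 3))
```

Under these assumptions each core has a few tens of kilobytes of local SRAM, which is why very large models need their parameters stored outside the chip.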

192 Cerebras CS-2s networked to deliver unprecedented computing power

According to Cerebras, a single CS-2 system is sufficient to hold one layer of a 120 trillion-parameter neural network (compared with around one trillion parameters for today's largest models). Institutions and companies that want to go further will be able to build a huge supercomputer, since the company has managed to get 192 CS-2 systems working together.

This results in a network of about 163 million cores dedicated to high-performance computing, able to handle a model with 120 trillion parameters, or potential connections. By comparison, the human brain makes do with roughly 100 trillion synaptic connections, 20 trillion fewer. It should be noted, however, that the brain consumes far less energy.
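The cluster-level numbers follow from simple multiplication of the per-system figures quoted in the article. The sketch below reproduces them; the power estimate is an upper bound that assumes every system draws its full 23 kW and ignores networking and external memory, which the article does not quantify.

```python
# Per-system figures quoted in the article.
SYSTEMS = 192
CORES_PER_SYSTEM = 850_000
MAX_POWER_KW_PER_SYSTEM = 23   # "up to 23 kW", so this is a ceiling
MODEL_PARAMETERS = 120e12      # 120 trillion
BRAIN_SYNAPSES = 100e12        # roughly 100 trillion, as cited above

total_cores = SYSTEMS * CORES_PER_SYSTEM
# Assumption: CS-2 systems only, all at maximum draw.
max_power_mw = SYSTEMS * MAX_POWER_KW_PER_SYSTEM / 1000
difference = MODEL_PARAMETERS - BRAIN_SYNAPSES

print(f"Total cores:        {total_cores:,}")
print(f"Max power (CS-2s):  {max_power_mw:.3f} MW")
print(f"Parameters vs synapses: {difference / 1e12:.0f} trillion more")
```

The product comes to 163.2 million cores, which is where the "163 million" headline figure comes from.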

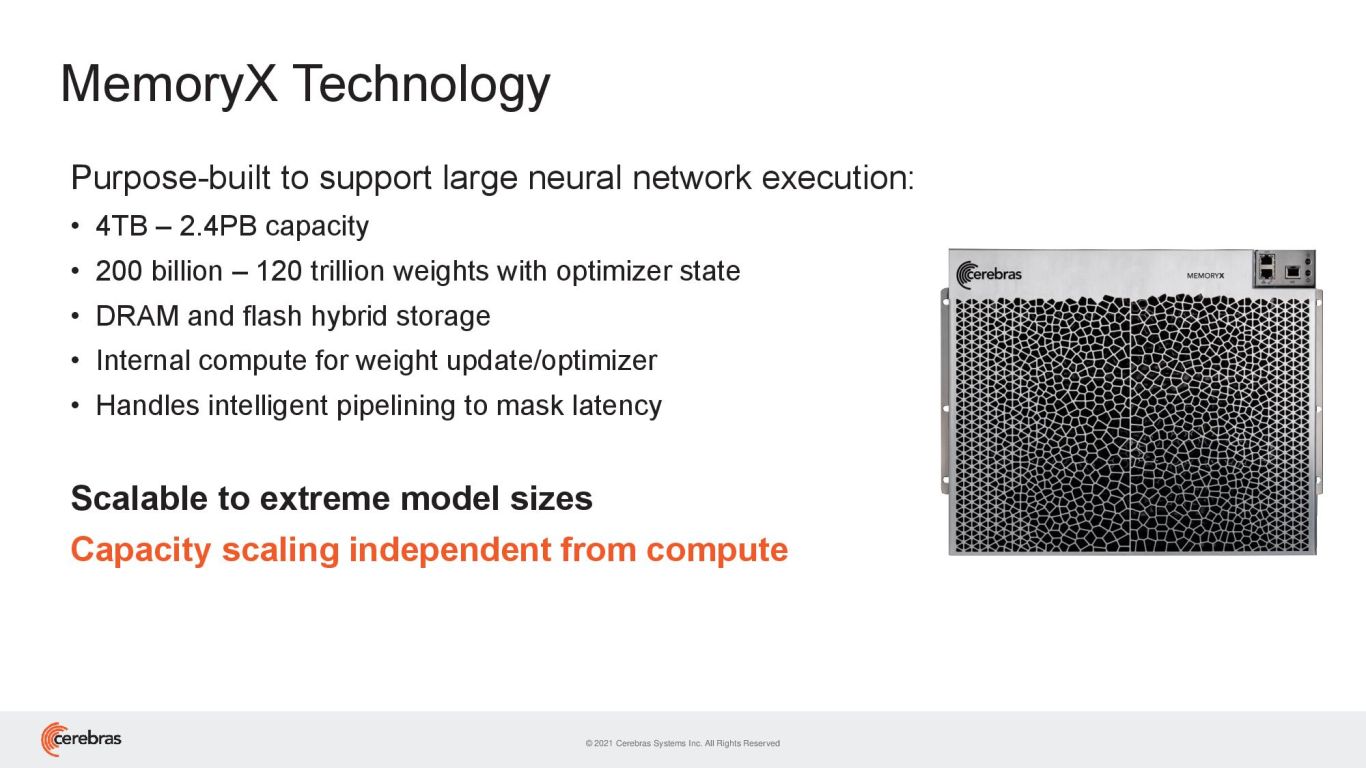

To build a network like this, Cerebras relies on data parallelism, distributing the data across systems to achieve linear performance scaling. The model parameters are stored in MemoryX units that can hold between 4 TB and 2.4 PB of data. Each unit serves up to 32 CS-2 systems and combines RAM and flash memory to keep models and parameters off the processors, freeing each chip's internal memory for computation.
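The general idea of data parallelism can be sketched in a few lines: every worker holds the same parameters, processes its own slice of the batch, and the resulting gradients are averaged before the shared parameters are updated. The code below is a generic toy illustration on a one-parameter linear model, not Cerebras software; the function names, the learning rate and the equal-sized shards are assumptions. The last lines also work out the storage available per parameter, assuming the largest MemoryX capacity (2.4 PB) is paired with the largest model (120 trillion parameters).

```python
def gradient(w, shard):
    """Gradient of the mean squared error of y = w * x over one data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, batch, n_workers, lr=0.01):
    """One training step with the batch split evenly across n_workers.

    Assumes len(batch) is divisible by n_workers. On a real cluster the
    per-shard gradients would be computed concurrently on separate systems.
    """
    shard_size = len(batch) // n_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_workers)]
    grads = [gradient(w, shard) for shard in shards]
    avg_grad = sum(grads) / n_workers   # stand-in for the gradient reduction
    return w - lr * avg_grad            # single update of the shared parameter


# Toy data generated from y = 3x.
batch = [(float(x), 3.0 * x) for x in range(1, 9)]
w_one_worker = data_parallel_step(0.0, batch, n_workers=1)
w_four_workers = data_parallel_step(0.0, batch, n_workers=4)
print(w_one_worker, w_four_workers)

# Storage per parameter under the pairing assumption stated above.
bytes_per_parameter = 2.4e15 / 120e12
print(f"{bytes_per_parameter:.0f} bytes per parameter")
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, so adding workers changes how fast a step runs rather than what it computes. That property is what makes linear scaling possible in principle.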

Such a network could allow models like GPT-3 to be trained in record time, whereas training currently takes weeks or months.

Translated from 192 systèmes Cerebras CS-2 mis en réseau pour mettre 163 millions de cœurs au service de l’intelligence artificielle