Deep reinforcement learning (deep RL), which combines RL with deep learning, is a common approach for training robots in simulated environments. Researchers at the University of California, Berkeley, built on recent developments in the “Dreamer” world model and used online reinforcement learning in the real world to train robots without a simulator or demonstrations. Their study, “DayDreamer: World Models for Physical Robot Learning,” was published on arXiv.

Training an AI in a virtual environment is much simpler than training it in the real world: it saves money and time and avoids damage to prototypes. But the transition from simulation to the real world is considered very difficult, because a virtual environment can never model the physical world with perfect fidelity.

The Dreamer world model

Danijar Hafner, Timothy Lillicrap, Jimmy Ba and Mohammad Norouzi presented the “Dreamer” algorithm at ICLR 2020. This RL agent learns behaviors through latent imagination: it predicts the outcomes of potential actions inside a learned world model rather than trying every one of them in the environment. By planning within its world model, Dreamer has recently outperformed pure reinforcement learning in video games while learning from only small amounts of interaction. Researchers at UC Berkeley wanted to know whether Dreamer could enable faster learning on physical robots.

Learning without a simulator

Danijar Hafner, who presented Dreamer in 2020, is also part of the UC Berkeley team. A PhD student in AI in Toronto, he is currently working in the lab of Pieter Abbeel. Abbeel is also a member of the team, along with Philipp Wu, Alejandro Escontrela and Ken Goldberg. The study uses recent developments in the Dreamer world model to train a range of robots through online reinforcement learning in the real world, without a simulator or demonstrations.

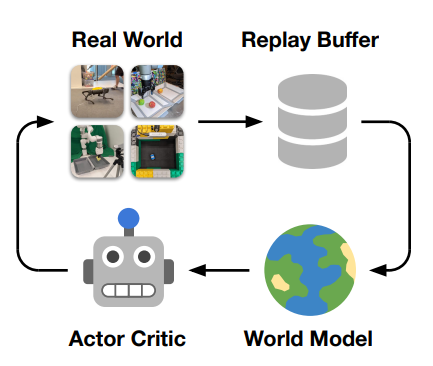

The figure above illustrates how Dreamer builds a world model from a replay buffer of past experience, learns behaviors from imagined rollouts in the latent space of the world model, and continuously interacts with the environment to explore and refine its behaviors.
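The three-part loop described above can be illustrated with a deliberately tiny sketch. It is not the Dreamer implementation: the environment is a hypothetical 1-D point, the "world model" is a single parameter fitted by least squares directly on the state (there is no latent space), the reward function is assumed to be known, and behavior learning is replaced by a simple search over candidate policy gains scored on imagined rollouts, where Dreamer uses neural networks and actor-critic learning. All names and constants are assumptions made for illustration.

```python
import random

GOAL = 1.0       # hypothetical target position of the toy task
TRUE_STEP = 0.2  # real dynamics x' = x + TRUE_STEP * a, unknown to the agent


def clip(a):
    """Limit an action to the range [-1, 1]."""
    return max(-1.0, min(1.0, a))


def env_step(x, a):
    """Real environment (hypothetical): a 1-D point moved by a clipped action."""
    return x + TRUE_STEP * clip(a)


def fit_world_model(replay):
    """World model learning: least-squares fit of k in x' = x + k * a."""
    num = sum(a * (x_next - x) for x, a, x_next in replay)
    den = sum(a * a for _, a, _ in replay)
    return num / den if den > 0 else 0.0


def policy(gain, x):
    """Behavior: proportional controller with one learnable gain."""
    return clip(gain * (GOAL - x))


def imagined_return(k, gain, x, horizon=15):
    """Roll the policy forward inside the learned model only."""
    total = 0.0
    for _ in range(horizon):
        x = x + k * policy(gain, x)   # predicted, not real, next state
        total -= abs(GOAL - x)        # reward assumed known: negative distance
    return total


def improve_policy(k, starts, candidates):
    """Behavior learning: keep the gain with the best imagined return."""
    def score(gain):
        return sum(imagined_return(k, gain, s) for s in starts)
    return max(candidates, key=score)


def train(steps=200, seed=0):
    rng = random.Random(seed)
    candidates = [0.5 * i for i in range(21)]  # gains 0.0, 0.5, ..., 10.0
    replay, k, gain, x = [], 0.0, 0.0, 0.0
    for t in range(steps):
        # Interact with the real environment, with exploration noise.
        a = clip(policy(gain, x) + rng.uniform(-0.3, 0.3))
        x_next = env_step(x, a)
        replay.append((x, a, x_next))
        x = x_next
        # Periodically refit the model and improve the behavior in imagination.
        if (t + 1) % 10 == 0:
            k = fit_world_model(replay)
            starts = [s for s, _, _ in replay[-20:]]
            gain = improve_policy(k, starts, candidates)
    return k, gain, x


if __name__ == "__main__":
    print(train())
```

Calling `train()` returns the fitted model parameter, the selected policy gain and the final position of the point. The structural point is that the real environment is stepped only once per loop iteration, while every policy candidate is evaluated purely inside the learned model.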

The team’s goal is to push the boundaries of real-world robot learning and provide a robust platform for future research that will demonstrate the benefits of world models for such learning.

The researchers applied Dreamer to four robots, demonstrating that real-world learning is possible without introducing new algorithms. The tasks were varied, spanning different action spaces, sensory modalities and reward structures:

- They trained a quadruped to roll off its back, stand up and walk in just one hour. They then pushed the robot and found that Dreamer adapts within 10 minutes, learning to withstand the pushes or to quickly roll over and stand back up.

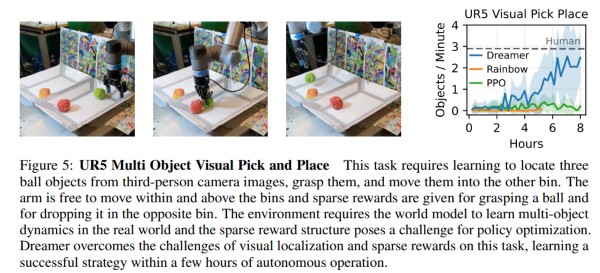

- Visual Pick and Place (above): On two different robotic arms, Dreamer learns to pick and place multiple objects directly from camera images and sparse rewards, approaching human performance.

- On a wheeled robot, Dreamer learns to navigate to a goal solely from camera images, automatically resolving ambiguity about the robot’s orientation.

Using the same hyperparameters across all experiments, the researchers found that Dreamer is capable of learning online in the real world, which establishes a strong baseline for future work.

The researchers are releasing open-source software infrastructure for all of their experiments. It supports different action spaces and sensory modalities, providing a flexible platform for future research on world models for real-world robot learning.

Article source:

DayDreamer: World Models for Physical Robot Learning

Philipp Wu, Alejandro Escontrela, Danijar Hafner, Ken Goldberg, Pieter Abbeel.

Translated from DayDreamer : former les robots dans le monde réel grâce à l’apprentissage par renforcement en ligne