France experienced severe heat waves this year, including one unusually early in the season. With climate change, such episodes may recur every year, increasing the risk of forest fires, as was unfortunately observed this summer. IRT Saint Exupéry is studying AI solutions based on artificial neural networks to detect forest fires as early as possible from orbiting satellites. It presented the results of this work in a paper at CNIA 2022, “Model and dataset for multi-spectral detection of forest fires on board satellites”, and decided to make the model available to the scientific community to strengthen research on this topic.

This work was carried out as part of the “Autonomous and Reactive Image Chain” (CIAR) project, which studies technologies for deploying AI for image processing on embedded systems (satellites, delivery drones, etc.). It is conducted at IRT Saint Exupéry in partnership with Thales Alenia Space, Activeeon, Avisto, ELSYS Design, MyDataModels, Geo4i, INRIA and LEAT/CNRS.

Following a call for applications, the project was selected to carry out in-orbit demonstrations as part of the OPS-SAT mission. This nano-satellite, launched on December 18, 2019, is only 30 cm high and is equipped with an Intel Cyclone V computer (a System on Chip, or SoC), as well as a small camera with a ground sampling distance of 50 meters. The CIAR team contributed to the development of AI solutions embedded in the satellite.

On March 22, 2021, the team achieved two space firsts:

- The remote update, from a ground station, of an artificial neural network embedded on a satellite.

- The use of an FPGA (Field-Programmable Gate Array) to deploy and use this neural network in orbit.

Model and dataset for multi-spectral forest fire detection on board satellites

It is estimated that the number of forest fires could increase by 50% by 2100. Houssem Farhat, Lionel Daniel, Michaël Benguigui and Adrien Girard investigated detecting these fires by remote sensing on board a satellite, in order to raise early warnings directly from space.

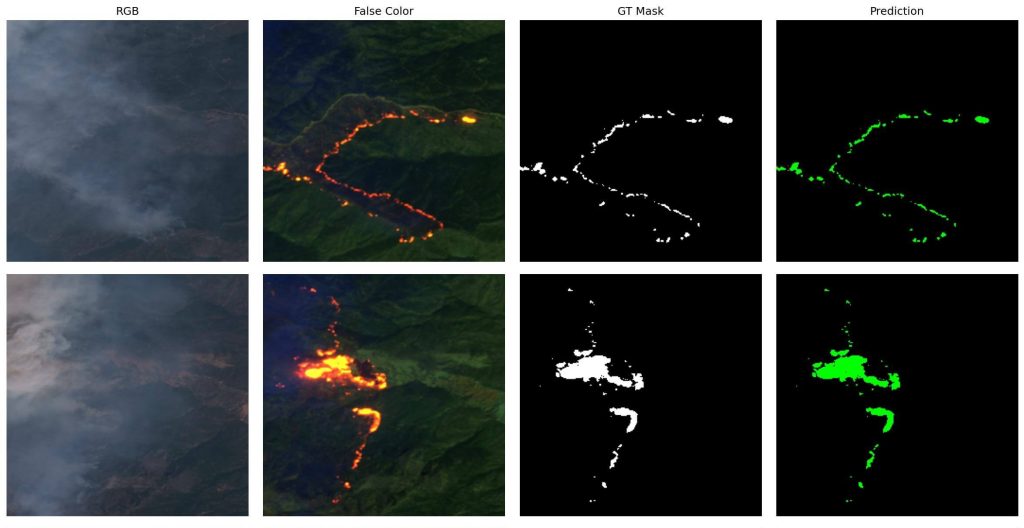

They trained a UNet-MobileNetV3 neural network to segment a set of 90 multispectral images from Sentinel-2, a series of Earth observation satellites of the European Space Agency developed under the EU-funded Copernicus program. They annotated these images semi-automatically and then verified them manually.

The Sentinel-2 images were downloaded from the Sentinel-Hub OGC WCS API, and their GSD (Ground Sampling Distance, in meters per pixel) ranged from 40 to 80 m. The images were then sliced into 256×256-pixel patches and split into three subsets used to train, validate and test the model, which achieved an IoU (Intersection over Union) of 94%.
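To make the patch slicing and the IoU metric concrete, here is a minimal sketch in plain Python. It is not the team's code: the function names are hypothetical, partial tiles at the image border are simply dropped (an assumption, the paper may pad or overlap instead), and the IoU is computed on the fire class of a single binary mask, which may differ from how the published 94% figure was averaged.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # binary mask: 1 = fire pixel, 0 = background


def tile_origins(height: int, width: int, patch: int = 256) -> List[Tuple[int, int]]:
    """Top-left (row, col) corners of non-overlapping patches.

    Assumption: only patches that fit entirely inside the image are kept.
    """
    return [
        (row, col)
        for row in range(0, height - patch + 1, patch)
        for col in range(0, width - patch + 1, patch)
    ]


def crop(image: Sequence[Sequence[int]], row: int, col: int, patch: int = 256) -> Mask:
    """Extract one patch-by-patch window starting at (row, col)."""
    return [list(line[col:col + patch]) for line in image[row:row + patch]]


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over Union of two binary masks of identical shape."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += p & t
            union += p | t
    # Convention chosen here: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# A 600x520 image yields four full 256x256 patches.
print(tile_origins(600, 520))  # [(0, 0), (0, 256), (256, 0), (256, 256)]

# One overlapping fire pixel out of two predicted or annotated pixels.
print(iou([[1, 1], [0, 0]], [[1, 0], [0, 0]]))  # 0.5
```

An IoU of 94% therefore means that the predicted and annotated fire areas overlap on 94% of their combined surface.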

The network was then deployed on a GPU that could be embedded in a low Earth orbit satellite.

Version 2 (constant GSD of 20 m):

A second version of the dataset was produced after the paper was published at CNIA 2022. The Sentinel-2 images were downloaded from the Copernicus service, at maximum resolution and at the L1C processing level. The team recommends this second version for any future research, since all patches in it have a constant GSD of 20 m.
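As a rough illustration of why a constant GSD matters, the ground footprint of a patch is simply its side in pixels multiplied by the GSD. The helper below is a hypothetical sketch, not part of the published code; it compares the side length covered by a 256-pixel patch at the GSD values quoted in this article.

```python
def patch_side_km(patch_px: int, gsd_m: float) -> float:
    """Side length on the ground, in kilometers, of a square patch."""
    return patch_px * gsd_m / 1000.0


# GSD values quoted for version 1 (40 to 80 m) and version 2 (20 m).
for gsd in (20, 40, 80):
    print(f"GSD {gsd} m -> {patch_side_km(256, gsd):.2f} km per patch side")
```

With version 1, the same 256-pixel patch can cover between 10.24 km and 20.48 km on a side, so a fire of a given size occupies a varying number of pixels; at a constant 20 m, every patch covers 5.12 km on a side.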

The IRT has also decided to publish the dataset:

- Download the two versions of the dataset here

- CNIA 2022 results (based on S2WDS Version 1) are reproducible with the code available here

- Similar code for S2WDS Version 2 (produced after the CNIA 2022 paper) is available here

Paper source: Model and dataset for multi-spectral detection of forest fires on board satellites, National Conference on Artificial Intelligence 2022 (CNIA 2022), Jun 2022, Saint-Etienne, France. hal-03866412.

Authors:

- H. Farhat, Saint-Exupéry Institute of Technological Research, AViSTO

- L. Daniel, Saint-Exupéry Institute of Technological Research

- M. Benguigui, Saint-Exupéry Institute of Technological Research, Activeeon

- A. Girard, Saint-Exupéry Institute of Technological Research

Translated from Projet CIAR : le deep learning pour détecter les feux de forêt depuis les satellites