Last month, a team of researchers at the University of Georgia published a study showing that people were more likely to trust opinions given by an algorithm than those given by a crowd of individuals. More recently, the National Institute of Standards and Technology (NIST) has proposed a method for assessing how much trust users can place in AI models. The question at the heart of this work is: when do we decide to trust, or not trust, recommendations made by a machine?

A study to find out whether human trust in machines is measurable

This question is the focus of a NIST project that aims to learn more about how humans trust AI systems. The research resulted in a 23-page paper by Brian Stanton of the NIST Information Technology Laboratory and Theodore Jensen, a researcher at the University of Connecticut and a computer scientist at NIST. The study is part of a broader NIST initiative to better understand trustworthy AI.

To answer this question, Brian Stanton first asked whether the trust that humans place in AI systems can be measured or quantified and, if so, how it can be measured appropriately and accurately. As he explains:

“There are many factors built into our decisions about trust. It’s how the user thinks, feels about the system, and perceives the risks associated with using it.”

An initial research phase to understand the mechanisms of trust

Together with Theodore Jensen, Stanton drew on existing scientific articles and research on trust, following two lines of work:

- First, they focused on analyzing the integral role of trust in human history to understand how it has shaped our cognitive processes.

- Building on this first step, they then turned their attention to the trust challenges specific to AI, whose capabilities often exceed those of humans.

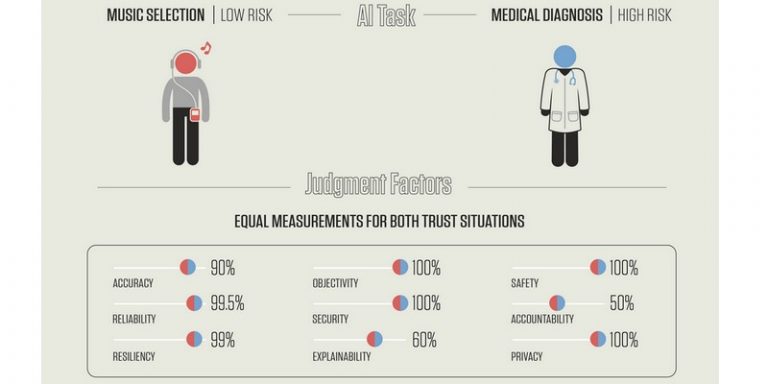

From this research, they proposed a list of nine factors that influence whether a person decides to trust an AI model:

- accuracy

- reliability

- resilience

- objectivity

- security

- explainability

- safety

- accountability

- privacy.

The paper describes each of these characteristics and shows how a person may evaluate an AI system on the basis of one, several, or all of them.

The factors are more or less influential depending on the AI model

Let’s start with an example: the “accuracy” factor, as applied by someone evaluating a music recommendation algorithm. A listener who is eager to discover new songs and step outside their musical comfort zone will not be very concerned with how accurately the AI model chooses a song or builds a playlist.

On the other hand, when the AI system diagnoses cancer, a person will often not trust a model that is only 80% or 90% accurate, and will be reluctant to use it, especially knowing that the result could be bad news. This example shows that each factor weighs more or less heavily depending on the task the AI system performs and how it performs it.

Based on this research, Brian Stanton proposes a specific model and asks for expert input:

“We propose a model of AI user trust. It’s all based on the research of others and the fundamental principles of cognition. For this reason, we would like to receive feedback on what work the scientific community could pursue to provide experimental validation of these ideas.”

NIST is inviting the public, and the scientific community in particular, to comment on the paper and the project through a response form. The call for comments is open until July 30, 2021. With the feedback gathered through this public review, the two researchers hope to obtain more precise information to guide the next stage of their research.

For more information on trustworthy artificial intelligence, see ActuIA magazine N°3, whose feature section is devoted to this subject.

Translated from “Le NIST propose l’élaboration d’une méthodologie pour évaluer notre confiance envers les modèles d’intelligence artificielle” (NIST proposes developing a methodology to assess our trust in artificial intelligence models).