Last month, GitHub announced the launch of GitHub Copilot, its code generation tool. This Visual Studio Code extension uses AI to help developers write code more easily. The Free Software Foundation (FSF) has now weighed in on the system, which it considers “unacceptable and unjust”, and has questioned the coding assistant’s fairness, legitimacy and legality.

Fair use, fairness, legitimacy, legality: FSF points the finger at GitHub Copilot

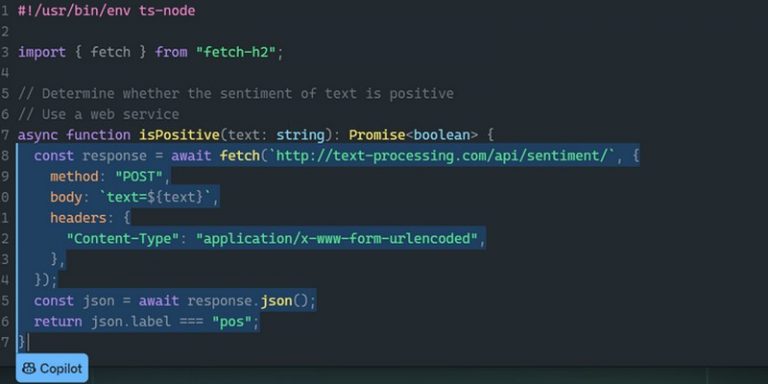

According to the FSF, the GitHub Copilot extension for Visual Studio Code raises several legal questions that have not yet been addressed. Copilot was built by GitHub in collaboration with OpenAI and is currently available only as a limited technical preview. It uses machine learning, in the form of a neural network trained on open source code, to suggest lines of code or entire functions to developers as they write software. It is powered by Codex, an engine billed as more powerful than GPT-3 at generating code.

Here is what the FSF says in its statement on the use of Copilot:

“Developers want to know if training a neural network on their software can be considered fair use. Others who might want to use Copilot wonder if code snippets and other material copied from GitHub-hosted repositories could result in copyright infringement. And even if everything could be legally copied, stakeholders wonder if there’s something fundamentally unfair about a proprietary software company building a service from their work.”

The FSF raises several questions about Copilot; GitHub says it is open to discussion

According to the FSF, several questions still need to be answered:

- Does training Copilot on public repositories infringe copyright? Can it be considered fair use?

- To what extent can Copilot’s output give rise to claims of infringement of GPL-licensed works?

- Can developers using Copilot comply with free software licenses such as the GPL?

- How can developers ensure that their copyrighted code is protected from infringement by Copilot?

- If Copilot generates code that infringes on an open source licensed work, how can the copyright holder know about the infringement?

- Is a trained AI/ML model protected by copyright? If so, who owns that copyright?

- Should organizations like the Free Software Foundation advocate for a change in copyright law related to these issues?

GitHub responded to the FSF by saying that the company was open to discussing all of these issues. In its own words:

“This is a new space, and we are eager to engage with developers on these topics and help the industry establish appropriate standards for training AI models.”

For its part, the foundation is inviting anyone interested in the topic to submit a white paper. Submissions must be no more than 3,000 words long, must not contain any information that would compromise the anonymity of the author(s), so that they can be passed on to reviewers, and should be written in English if possible. Full details are available on the FSF website.

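Translated from the French article “La Free Software Foundation pointe du doigt GitHub Copilot qu’elle juge inacceptable et injuste” (“The Free Software Foundation points the finger at GitHub Copilot, which it deems unacceptable and unjust”).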