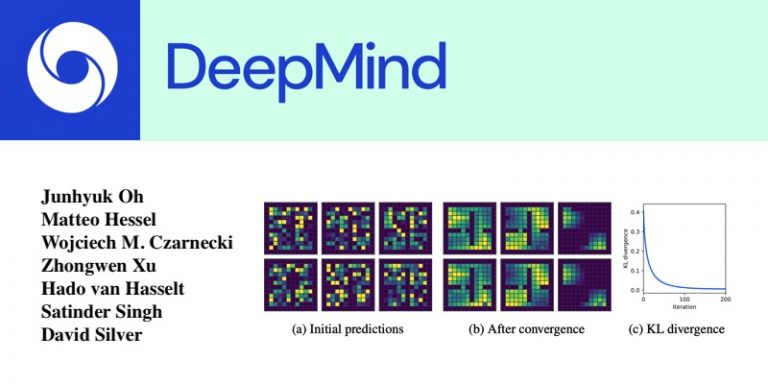

DeepMind researchers published a study on reinforcement learning algorithms on arXiv on July 17th. Junhyuk Oh, Matteo Hessel, Wojciech M. Czarnecki, Zhongwen Xu, Hado van Hasselt, Satinder Singh and David Silver propose a method for automatically generating reinforcement learning (RL) algorithms, which discovers both what to predict and how to learn from it by interacting with environments.

The research team explains in the paper that the discovered algorithm was tested on Atari video games and that the results were encouraging.

“Reinforcement learning algorithms update an agent’s parameters according to one of several possible rules, discovered manually through years of research. Automating the discovery of update rules from data could lead to more efficient algorithms, or to algorithms better adapted to specific environments.

Although there have been previous attempts to address this significant scientific challenge, it remains an open question whether it is feasible to discover alternatives to fundamental concepts of RL such as value functions and temporal-difference learning.

This paper presents a new meta-learning approach that discovers a complete update rule, including both ‘what to predict’ (e.g., value functions) and ‘how to learn from it’ (e.g., bootstrapping), by interacting with a set of environments. The result of this method is an RL algorithm that we call the Learned Policy Gradient (LPG). Empirical results show that our method discovers its own alternative to the concept of value functions. Moreover, it discovers a bootstrapping mechanism to maintain and use its predictions. Surprisingly, when trained only on toy environments, the LPG generalizes effectively to complex Atari games and achieves non-trivial performance. This shows the potential for discovering general-purpose RL algorithms from data.”
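For readers unfamiliar with the two hand-designed concepts the abstract mentions, here is a minimal sketch of a classic temporal-difference update. It is not the LPG rule from the paper, only an illustration of the kind of manually designed rule LPG aims to replace. The function name `td0_update`, the state names and the parameter values are assumptions chosen for this example.

```python
def td0_update(values, state, reward, next_state,
               alpha=0.1, gamma=0.9, terminal=False):
    """Return a copy of `values` after one TD(0) step (illustrative only)."""
    # "What to predict": values[s] estimates the expected discounted return from s.
    # "How to learn from it": bootstrap from the current estimate of the next state.
    bootstrap = 0.0 if terminal else values[next_state]
    target = reward + gamma * bootstrap
    updated = dict(values)
    updated[state] = values[state] + alpha * (target - values[state])
    return updated


# Hypothetical two-state chain: A -> B -> end, with reward 1.0 on leaving B.
values = {"A": 0.0, "B": 0.0}
values = td0_update(values, "B", reward=1.0, next_state="B", terminal=True)
values = td0_update(values, "A", reward=0.0, next_state="B")
print(values)
```

In this sketch both the prediction target and the bootstrapping step are fixed by hand. According to the abstract, LPG instead learns both from interaction with a set of environments.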

In their experiments, the researchers used complex Atari games including Tutankham, Breakout and Yars' Revenge. They believe that this first trial is the starting point for promising new experiments on reinforcement learning algorithms. “The proposed approach has the potential to significantly accelerate the discovery of new reinforcement learning algorithms by automating the process in a data-driven way. If the proposed research direction is successful, it could shift the research paradigm from the manual development of RL algorithms to the construction of an appropriate set of environments so that the resulting algorithm is effective.

In addition, the proposed approach can also be used as a tool to help RL researchers develop and improve their hand-designed algorithms. In this case, the proposed approach can provide insight into what a good update rule looks like, depending on the architecture that researchers provide as input, which could speed up the manual discovery of RL algorithms.

On the other hand, because of the data-driven nature of the proposed approach, the resulting algorithm may capture unintended biases present in the training environments. In our work, we do not provide domain-specific information, other than rewards, when discovering an algorithm, which makes it difficult for the algorithm to capture biases in training environments. However, more work is needed to eliminate biases in the discovered algorithm in order to avoid potential negative outcomes.”

Translated from DeepMind présente une solution de génération automatique d’algorithmes d’apprentissage par renforcement