In a BBC article, a software engineer claimed to have installed cameras with facial recognition in police stations in China’s Xinjiang region. The software, which uses artificial intelligence to detect emotions, is reportedly being tested on Uyghurs, raising fears that their persecution will escalate further. The Chinese embassy in London declined to give any further information on the subject, while affirming that the political and social rights of all ethnic groups in the country are guaranteed.

BBC interview with software engineer involved in the project

A software engineer involved in the development of a full-scale test of an emotion recognition system in Xinjiang spoke to the BBC on condition of anonymity. He said he feared for his safety and did not mention the company he worked for.

The software engineer told the BBC:

The Chinese government uses Uyghurs as test subjects for various experiments, just as rats are used in laboratories. We placed the emotion detection camera 3 meters away from the subject. It is similar to a lie detector, but uses much more advanced technology.

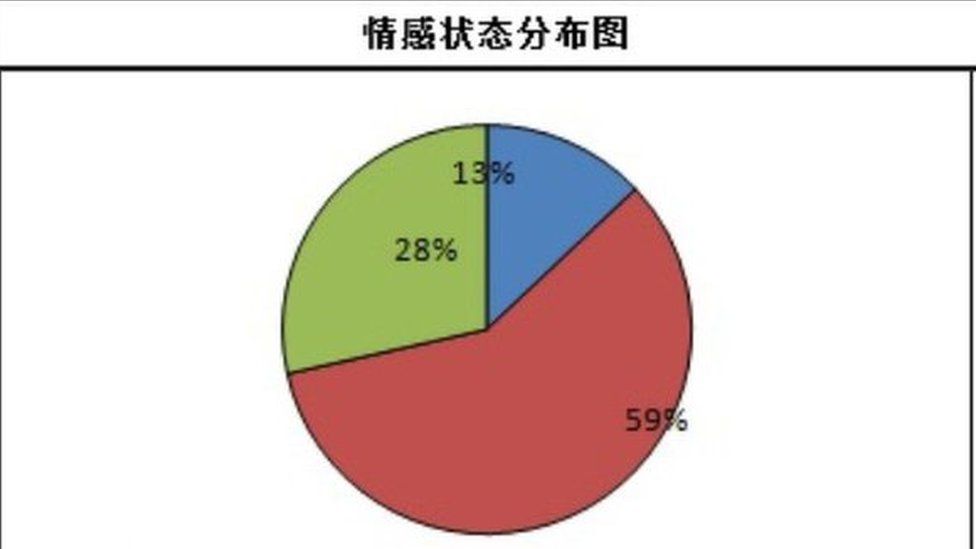

He also reportedly provided the BBC with evidence showing that the AI system is trained to detect and analyse the slightest facial expression of an individual. These are analysed and a pie chart is created, with the red part of the chart corresponding to an anxious or negative state of mind.

According to the engineer quoted by the BBC, the software would be used to judge Uyghurs automatically, without credible evidence.

For Sophie Richardson, China director of Human Rights Watch, the practice is extremely shocking:

It’s not just shocking because these people are being reduced to graphics, it’s shocking because these people are in conditions of coercion, they’re under enormous pressure, they’re logically nervous, and this is taken as an indication of guilt. I think this is extremely problematic.

Almost 12 million Uyghurs live in Xinjiang. The region is known for its “re-education centres”, described as high-security detention camps, where up to one million Uyghurs are reportedly held. Residents are monitored daily: the Chinese government maintains that separatist groups want to create their own state in Xinjiang and that terrorist attacks in the region have already killed hundreds of people.

The use of artificial intelligence in China and ethical issues

Nearly 400 million: that is the number of surveillance cameras in China, half of the number deployed worldwide. The country has a large number of smart cities, the most striking example of which is Chongqing: according to a BBC journalist, these systems let the government scrutinize every move, from the elevator you take to leave your apartment to the taxi you take to get from point A to point B.

The Chinese embassy in London did not wish to answer questions from the British media regarding the engineer’s comments, but said:

“Political, economic and social rights and freedom of religious belief in all ethnic groups in Xinjiang are fully guaranteed. People live in harmony regardless of their ethnicity and enjoy a stable and peaceful life without any restrictions on personal freedom.”

The embassy also said that it had no knowledge of the existence of such programs and formally stated “there is no facial recognition technology to analyze Uyghurs.”

Translated from Chine : Une IA serait utilisée pour analyser les émotions des ouïghours sous contrainte