Researchers at Amazon and two computer science professors at the University of California, Los Angeles (UCLA) teamed up with Meta’s chief scientist on a machine learning study that presents PGE, an end-to-end, noise-tolerant embedding learning framework. The framework leverages both the textual information and the graph structure of product knowledge graphs (PGs) to learn embeddings for error detection. The experiments reported in the study demonstrate the effectiveness of PGE. The study, entitled “Robust Product Graph Embedding Learning for Error Detection”, was published on Amazon Science.

The authors of the study are Xian Li and Yifan Ethan Xu, researchers at Amazon; Xin Luna Dong, chief scientist at Meta; and Yizhou Sun and Kewei Cheng, professors at UCLA.

More and more potential customers are turning to e-commerce sites such as Amazon, eBay and Cdiscount for their purchases, and the pandemic has amplified this trend. These sites, for their part, have made greater use of product knowledge graphs (PGs) to improve product search and provide recommendations.

Product knowledge graphs

A PG is a knowledge graph (KG) that describes the values of product attributes and is built from product catalog data. In a PG, each product is associated with several attributes, such as brand and category, along with other product properties such as flavor and ingredients. Unlike traditional KGs, where most triples take the form (head entity, relation, tail entity), most triples in a PG take the form (product, attribute, attribute value), where the attribute value is a short text, e.g., (“Brand A Tortilla Chips Spicy Queso, 6 – 2 oz bags”, flavor, “Spicy Queso”). Such triples are called attribute triples.
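As a rough illustration of this data model, an attribute triple can be written as a small record type. This is only a sketch: the class name `AttributeTriple`, its field names, and the dictionary `product_graph` are hypothetical and do not come from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttributeTriple:
    """A (product, attribute, attribute value) triple; the value is free text."""
    product: str    # product title
    attribute: str  # attribute name, e.g. "flavor"
    value: str      # short free-text attribute value


# The example triple from the text above.
triple = AttributeTriple(
    product="Brand A Tortilla Chips Spicy Queso, 6 – 2 oz bags",
    attribute="flavor",
    value="Spicy Queso",
)

# Group triples by product to mimic a (very small) product graph.
product_graph = {}
product_graph.setdefault(triple.product, []).append(triple)
```

The point of the sketch is that the tail of the triple is a text string rather than an entity identifier, which is what separates a PG from a traditional KG.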

Retailers provide the product data, and this data inevitably contains many kinds of errors, including contradictory, erroneous or ambiguous values. When such errors are ingested into a PG, they degrade the performance of the applications that rely on it. Since manual validation is impractical given the large number of products in a PG, an automatic validation method is needed.

Knowledge graph embedding (KGE) methods are effective at learning representations of multi-relational graph data and have shown promising performance in error detection (i.e., determining whether a triple is correct or not) in KGs. Unfortunately, existing KG embedding methods cannot be used directly to detect errors in a PG, because of its rich textual information and its noise.

The Product Graph Embedding (PGE) developed by the team

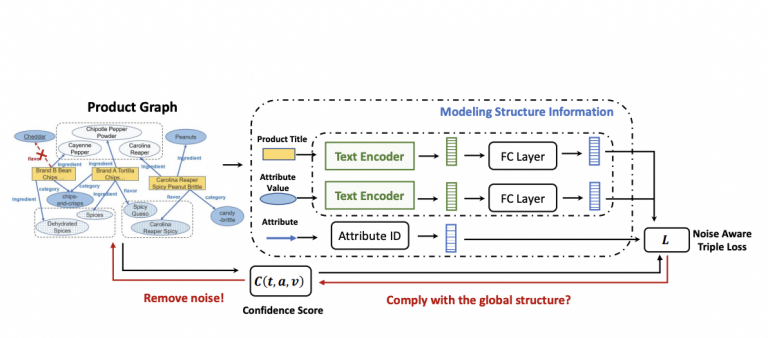

The team therefore had to answer a challenging research question: “how to generate embeddings for a text-rich, error-prone knowledge graph to facilitate error detection?” Its answer is a new, robust embedding learning framework, “Product Graph Embedding” (PGE), which learns effective embeddings for such knowledge graphs. The framework works in two steps:

- In a first step, the embeddings seamlessly combine signals from the textual information in the attribute triples and the structural information in the knowledge graph. The researchers applied a CNN encoder to learn text-based representations of product titles and attribute values, and then integrated these representations into the triple structure to capture the underlying patterns in the knowledge graph.

- In a second step, the researchers incorporated a noise-aware loss function to prevent noisy triples in the PG from distorting the embeddings during training.
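The first step can be sketched in plain Python. This is a toy under stated assumptions, not the study's model: the encoder is a single 1-D convolution with max-over-time pooling over randomly initialized (untrained) token vectors, and the triple score is a translation-style distance chosen here purely for illustration. All names (`encode_text`, `score`, `DIM`, `WINDOW`, `relation_vecs`) are hypothetical.

```python
import math
import random

DIM = 8      # embedding size (arbitrary for this sketch)
WINDOW = 2   # convolution window, in tokens

rng = random.Random(0)


def rand_vec(n):
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


token_vecs = {}                                          # token -> vector, created lazily
filters = [rand_vec(WINDOW * DIM) for _ in range(DIM)]   # DIM convolution filters
relation_vecs = {"flavor": rand_vec(DIM)}                # one vector per attribute


def embed_token(tok):
    if tok not in token_vecs:
        token_vecs[tok] = rand_vec(DIM)
    return token_vecs[tok]


def encode_text(text):
    """1-D convolution over token vectors, then max-over-time pooling."""
    vecs = [embed_token(t) for t in text.lower().split()]
    while len(vecs) < WINDOW:          # pad so at least one window exists
        vecs.append([0.0] * DIM)
    pooled = [-math.inf] * DIM
    for i in range(len(vecs) - WINDOW + 1):
        window = [x for v in vecs[i:i + WINDOW] for x in v]
        for f, filt in enumerate(filters):
            act = math.tanh(sum(w * x for w, x in zip(filt, window)))
            pooled[f] = max(pooled[f], act)
    return pooled


def score(title, attribute, value):
    """Translation-style distance (an assumption): smaller = more plausible."""
    h = encode_text(title)
    r = relation_vecs[attribute]
    t = encode_text(value)
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


s = score("Brand A Tortilla Chips Spicy Queso", "flavor", "Spicy Queso")
```

Because nothing here is trained, the value of `s` carries no meaning. The sketch only shows how text encodings of the title and the attribute value can be plugged into a triple-level score.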

For each positive instance in the training data, the framework predicts how consistent the triple is with the other triples in the KG and down-weights the instance if the confidence in its correctness is low. PGE is thus able to model both textual evidence and graph structure, and is robust to noise. The model is generic and scalable: it applies not only to the product domain but also performs well in other domains, such as the Freebase KG.
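The down-weighting idea can be illustrated with a confidence-weighted margin ranking loss. This is a minimal sketch under assumptions: the article does not give the exact loss or explain how the confidence is learned, so here the confidences are simply passed in as numbers in [0, 1], and the function name `noise_aware_loss` and the `margin` parameter are hypothetical.

```python
def noise_aware_loss(pos_scores, neg_scores, confidences, margin=1.0):
    """Confidence-weighted margin ranking loss over (positive, negative) pairs.

    Scores are distances (lower = more plausible). Each confidence lies in
    [0, 1]; a low value shrinks that positive triple's contribution.
    """
    total = 0.0
    for pos, neg, conf in zip(pos_scores, neg_scores, confidences):
        total += conf * max(0.0, margin + pos - neg)
    return total / len(pos_scores)


# The second "positive" triple scores badly (distance 2.0) and is probably noisy.
pos = [0.5, 2.0]
neg = [1.0, 1.0]

plain = noise_aware_loss(pos, neg, [1.0, 1.0])   # every triple fully trusted
robust = noise_aware_loss(pos, neg, [1.0, 0.1])  # suspicious triple down-weighted
```

With full trust the suspicious triple dominates the loss; with a confidence of 0.1 its contribution shrinks by a factor of ten, so it pulls far less on the embeddings during training.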

Article source: https://assets.amazon.science/51/23/25540bf446749098496f5d28e189/pge-robust-product-graph-embedding-learning-for-error-detection.pdf.